Responsible AI LLC

My name is Jiahao Chen, and I’m thrilled to announce the formation of Responsible Artificial Intelligence LLC, my new consultancy focused on practical, yet responsible AI solutions for the evolving needs of businesses and regulators that is informed by the very latest academic research.

About this newsletter. The research and practice of responsible AI is evolving rapidly. Every month, new laws are passed, existing regulations are updated to cover AI/ML systems, and new policy and AI research continues to be published at an exponential rate. I’m pleased to offer this newsletter summarizing my reading of the latest developments in academic research, government policy, and community events.

Solicitation. Please get in touch if you’re interested in consultancy services for designing and operating responsible AI solutions, algorithmic auditing services, or expert witness testimony.

Branding note. The logo and cover image were generated with Ben Firschman’s fork of Stable Diffusion, which runs locally on a M1 Mac. Interest in generative models has exploded in this past month, with the open source release of Stable Diffusion (GitHub; HuggingFace) by Stability.AI (CVPR’22, arXiv:2112.10752), and a comprehensive survey preprint of diffusion models (arXiv:2209.00796).

Generative models have shown sensitivity of the output to the specific text, even down to the level of word order and punctuation. Some recent work even proposes that the problem of finding suitable text to input to Stable Diffusion itself is amenable to machine learning, resulting in work on meta-prompting (arXiv:2209.11486; arXiv:2209.11711). My attempts to generate usable images occasionally resulted in rickrolling in the form of still frames from the music video.

At the same time, Stable Diffusion harbors the risk of amplifying NSFW content, stereotypes, and slurs (arXiv:2110.01963). The training data set was subsetted from LAION-5B, consisting of 5.8 billion annotated images. A previous version of this data set, LAION-400M, was found to include not only copyrighted materials, but also problematic content like pornography, sexual violence, racial stereotypes, and ethnic slurs (arXiv:2110.01963). Such problematic content was not removed in LAION-5B, but purports to be tagged as such (NeurIPS’22). It’s unclear if Stable Diffusion’s training subset has such problematic content removed.

☛ Are you part of Stable Diffusion’s training data? Search the LAION-5B data set on haveibeentrained.com. (CW: NSFW, violence)

☛ Have a Mac with Apple Silicon? Try Stable Diffusion on your local machine with Diffusion Bee.

The pseudotext in the logo image is a poorly understood phenomenon studied previously in DALL·E 2 (arXiv:2204.06125, arXiv:2206.00169). What do you think the text means?

This month in responsible AI

It’s impossible for any single person to give a comprehensive review of a rapidly evolving area like responsible AI, with multiple developments in governments worldwide and new research being published every day. Instead, here’s a summary of what I’ve been reading in the past month which may be of interest to readers.

This newsletter may be truncated by your e-mail provider, in which case you can simply read it online.

United Kingdom

The Digital Regulation Cooperation Forum (DRCF) have updated their response to public submissions on their two discussion papers on algorithmic processing and algorithmic auditing. This forum between four different UK regulatory bodies aims to support improvements in algorithmic transparency while enabling innovation in their regulated industries. They continue to welcome feedback on the two discussion papers.

☛ Send the Forum your feedback at drcf.algorithms@cma.gov.uk.

The public comment period for the Bank of England’s consultation paper, Model risk management principles for banks (CP6/22), ends on October 21. In particular, they are interested to know if these prudential regulations sufficiently “identify, manage, monitor, and control the risks associated with AI or ML models” (¶¶ 2.18-20).

☛ Send the Bank of England your feedback at CP6_22@bankofengland.co.uk.

United States

The U.S. Congress released their new beta API (api.congress.gov), enabling developers to retrieve legislative documents like bills and committee reports programmatically, and follow discussions of AI in the current session such as the AI Training Act, the Algorithmic Accountability Act, and the Advancing American AI Innovation Act.

☛ Sign up for a Congress.gov API key here.

[FR] The Federal Communications Commission’s Technical Advisory Committee held a meeting on September 15 which was videorecorded and publicly viewable. Starting at 2:20 of the recording, the AI/ML Working Group presented their vision of AI/ML as deeply imbedded into the new 6G network infrastructure across its entire lifecycle, especially in design (digital twins and resource allocation) and operations (NLP and MLOps). Natural language processing was of particular interest for its implications for existing laws on wiretapping and robocalling.

[FR] Notice of meeting. The National Artificial Intelligence Advisory Committee (NAIAC) will be holding a public meeting on October 12-13 (Stanford; hybrid). The notice claims the agenda will be published on the NAIAC website.

☛ Submit comments and questions by October 5, 5pm PDT to Melissa Banner at melissa.banner@nist.gov.

[FR] Request for Information. The Department of Transportation is seeking input on the use of AI in autonomous vehicles and its implications for pedestrian and bicyclist safety.

☛ Submit comments by October 15 on regulations.gov.

[FR] Request for Comment. The Department of Commerce “is requesting public comments to gain insight on the current global AI market and stakeholder concerns regarding international AI policies, regulations, and other measures which may impact U.S. exports of AI technologies.”

☛ Submit comments by October 17 on regulations.gov.

The U.S. National Institute of Standards and Technology (NIST) has published a second version of its AI risk management framework (pdf), ahead of its third workshop on October 18-19 (virtual; free registration).

☛ Register for the NIST workshop for free here.

[FR] Advance notice of proposed rulemaking. The U.S. Federal Trade Commission (FTC) is requesting “public comment on the prevalence of commercial surveillance and data security practices that harm consumers.” Commissioner Noah Joshua Phillips is particularly concerned with whether AI can amplify disparate outcomes, and if so, if the FTC “should put the kibosh on the development of artificial intelligence.” (emphasis mine)

☛ Submit comments by October 21 on regulations.gov.

New York City’s Department of Consumer and Worker Protection has published its Proposed Rules for compliance with the City’s law on automated employment decision tools (AEDT; Int. No. 1894-2020-A), which comes into effect in the new year. Prominent law firm Debevoise & Plimpton have summarized their reading of the Proposed Rules, highlighting the intersectionality concerns of testing beyond race, gender, and age in isolation, and the remaining gaps such as the limitations of metrics and data availability. DCWP is soliciting public comments ahead the public hearing on October 24 at 11am EDT (Zoom link).

☛ Provide your comments on AEDT to NYC here.

Company events

Disclosure: Responsible AI LLC was not paid to advertise these events.

Credo AI is organizing a Global Responsible AI Summit on October 27 (online), with a speaker list representing a mix of UK and US companies, policymakers and researchers.

Community events

A new pirate-themed Bias Bounty will be announced later this month at CAMLIS’22 (October 21-22 in Arlington, VA; $200 paid registration). The team behind this bias bounty include several people who were previously behind Twitter’s algorithmic bias bounty challenge in 2021.

☛ Sign up for the bias bounty here.

Research papers

Here’s a briefly annotated reading list of new papers and preprints that were published or updated in the past month.

[arXiv:2203.16432; blog; AIES’22] Nil-Jana Akpinar et al., Long-term Dynamics of Fairness Intervention in Connection Recommender Systems. Using an urn model for recommender systems, the authors prove that interventions must be dynamically applied over time to achieve a desired fairness outcome, and that remediation at a single point in time is insufficient in general.

[arXiv:2206.01254; NeurIPS’22; Twitter thread 1 and 2] Tessa Han, Suraj Srinivas, and Himabindu Lakkaraju, Which Explanation Should I Choose? A Function Approximation Perspective to Characterizing Post hoc Explanations. Introduces a “no free lunch”-type theorem which quantifies the ultimate limitation of post hoc explanation methods that attempt to build best local approximations to the decision function.

[arXiv:2206.11104; NeurIPS’22; OpenReview; project site] Chirag Agarwal et al., OpenXAI: Towards a Transparent Evaluation of Model Explanations. This Python package provides a uniform evaluation environment for explanation methods, using metrics based on faithfulness, stability and fairness. They also provide several synthetic data generators for evaluating whether methods correctly capture ground truth, which is almost always unknown in real data sets.

[arXiv:2207.04154; GitHub; Twitter thread] Dylan Slack et al., TalkToModel: Explaining Machine Learning Models with Interactive Natural Language Conversations. Describes a natural language system to query models for explanations of its predictions using plain English, which was favorably received by medical professionals.

[arXiv:2107.07511; Twitter thread] Anastasios N. Angelopoulos and Stephen Bates, A Gentle Introduction to Conformal Prediction and Distribution-Free Uncertainty Quantification. A tutorial to conformal prediction, a method for refining heuristic error bars on the output of AI systems into more statistically rigorous uncertainty bounds. Conformal prediction has seen renewed interest, judging from recent ICLR submissions.

[doi:10.1145/3514094.3534167, AIES’22; preprint; Twitter thread] Max Langenkamp and Daniel N. Yue, How Open Source Machine Learning Software Shapes AI. Estimates that open source software in ML produces $30 billion/year of value, albeit with a steep upfront development cost of $120-180 million/year for TensorFlow and $180-300 million/year for PyTorch, which leaves development of non-deep learning software severely underfunded by comparison. User interviews highlight traditional benefits of open sources, such as community engagement, while noting a convergence in APIs and capabilities.

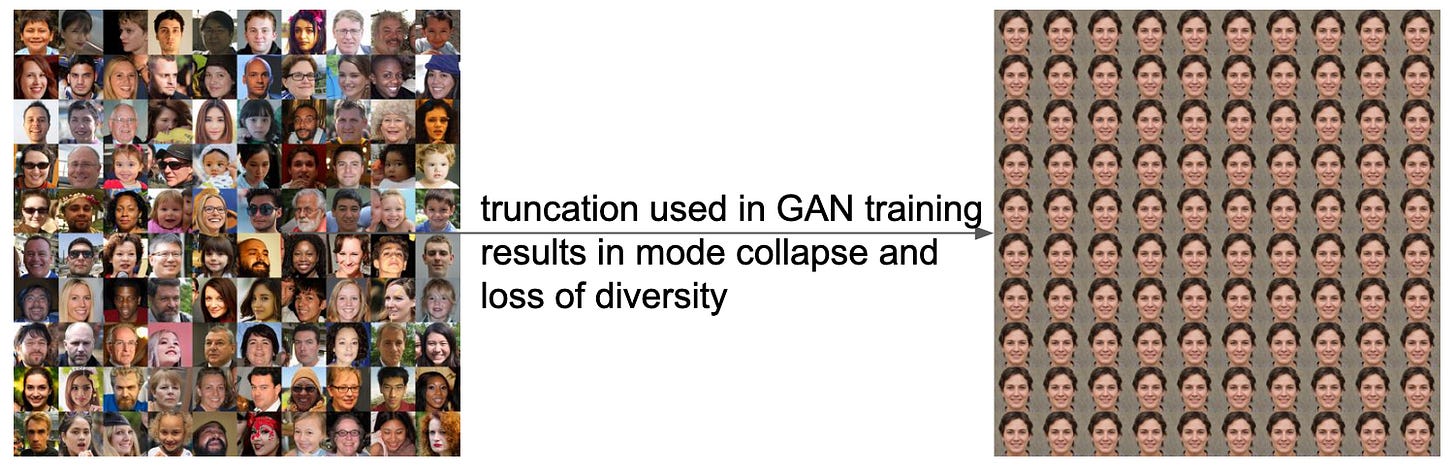

[arXiv:2209.02836; ECCV’22; project page] Vongani H. Maluleke et al., Studying Bias in GANs through the Lens of Race. Shows that truncation, a technique used to control output quality in GANs, reduces racial diversity in generated images by triggering mode collapse. In my opinion, this phenomenon is emblematic of a general issue of diversity loss resulting from compressing a learned representation.

[arXiv:2209.07572; EAAMO’22] Abeba Birhane et al., Power to the People? Opportunities and Challenges for Participatory AI. Reviews mass participation efforts in AI, focusing on case studies of Masakhane (NLP for African languages), Te Hiku Media (NLP data Māori with a community-focused license), and the Data Cards Playbook (for developing data-centric documentation).

[arXiv:2209.09125; Twitter thread] Shreya Shankar et al., Operationalizing Machine Learning: An Interview Study. Highlights the experimental nature of ML development as a recurring pain point in industry, leading to a tension between fast development and deployment on one hand, and validating correctness of results on the other.

[arXiv:2209.09780] David Gray Widder and Dawn Nafus, Dislocated Accountabilities in the AI Supply Chain: Modularity and Developers' Notions of Responsibility. Interviews with 27 AI practitioners reveals that modularity in tech design, while useful for separation of concerns as a best practice for software engineering, results in a disconnect in their accountability for algorithmic harms by reducing visibility of how their AI work is used in the larger system.

[SSRN:4217148] Mehtab Khan and Alex Hanna, The Subjects and Stages of AI Dataset Development: A Framework for Dataset Accountability (September 13, 2022). Comprehensive survey of legal concerns (such as copyright, privacy, and algorithmic harms) that surround the collection of training data for foundational models.

[report] Alec Radford et al., Robust Speech Recognition via Large-Scale Weak Supervision. This report announces OpenAI’s new Transformer-based Whisper model for multilingual transcription and translation. See also the summary Twitter threads here. Preliminary reports on Twitter document some limitations, such as underperformance relative to other ASR models on several metrics, as well as severe transcription errors in under-resourced languages like Bengali and Kannada.

☛ Try out Whisper on your own audio data using Google Colab notebooks such as this one.

☛ Do you have a recommendation for what to read? Comment below to be considered for the next newsletter.

Solicitation. Please get in touch if you’re interested in consultancy services for designing and operating responsible AI solutions, algorithmic auditing services, or expert witness testimony.